Gemini 3 Flash: Frontier Intelligence Built for Speed

Gemini 3 Flash shifts the speed–intelligence tradeoff: it is a frontier‑level model that delivers Pro‑grade reasoning at Flash‑level latency and lower cost. It’s now the default model in the Gemini app and in AI Mode in Search, and developers can access it across Google’s ecosystem, from the Gemini API to Vertex AI.

Table of Contents

- Gemini 3 Flash at a glance

- Speed, intelligence, and the Pareto frontier

- Built for developers: coding, tools, and multimodal

- For everyone: default in the Gemini app and Search

- Access and pricing

- FAQ

- Conclusion

Gemini 3 Flash at a glance

Gemini 3 Flash is designed for speed without sacrificing intelligence. Headline highlights:

- Frontier reasoning performance on PhD‑level benchmarks: GPQA Diamond 90.4%, Humanity’s Last Exam 33.7% (without tools). State‑of‑the‑art 81.2% on MMMU Pro, comparable to Gemini 3 Pro.

- Faster and more efficient than earlier models: 3× faster than Gemini 2.5 Pro (per Artificial Analysis benchmarking), while using about 30% fewer tokens on average than 2.5 Pro on typical traffic and delivering higher task performance.

- Optimized for interactive apps, coding, complex analysis, and multimodal understanding.

- Default in the Gemini app and AI Mode in Search, bringing frontier AI to everyday users at no cost.

- Developer access across Google AI Studio, Google Antigravity, Gemini CLI, Android Studio, Vertex AI, and Gemini Enterprise.

Why this matters

The Flash series has long been synonymous with responsiveness. Gemini 3 Flash extends that lineage by reaching frontier benchmark scores while pushing cost and latency down, a combination that enables near‑real‑time assistants, design‑to‑code workflows, and production‑grade coding agents.

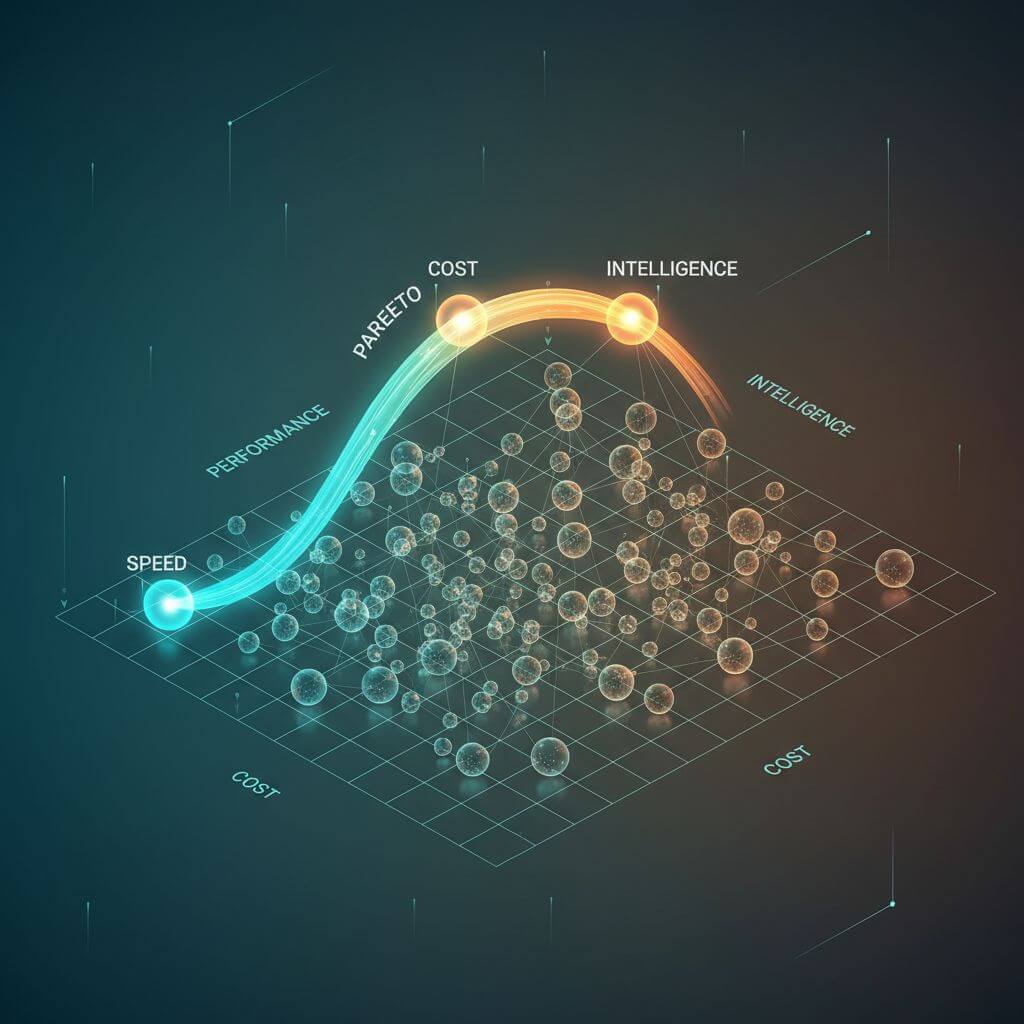

Speed, intelligence, and the Pareto frontier

Gemini 3 Flash shows that speed and scale need not come at the expense of reasoning. Its LMArena Elo relative to its price places it on the Pareto frontier: more performance for less cost and latency. Crucially, the model can modulate how much it thinks: on everyday tasks it reaches accurate outputs with fewer tokens, and on complex prompts it extends its “thinking” depth.

Key efficiency notes

- Token efficiency: ~30% fewer tokens than Gemini 2.5 Pro on typical everyday traffic.

- Latency: 3× faster than 2.5 Pro in Artificial Analysis benchmarking, enabling snappier UX in iterative workflows.

- Cost: $0.50 per 1M input tokens and $3 per 1M output tokens (audio input $1 per 1M input tokens).
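To make these rates concrete, here is a small Python sketch that estimates per‑request cost from token counts. It assumes the three prices listed above are the only ones that apply (it ignores any tiering, caching discounts, or taxes), and the function name `estimate_cost_usd` is hypothetical.

```python
from decimal import Decimal

# USD per 1M tokens, as listed in this post. Assumption: no tiering or
# discounts apply; check the official pricing page before relying on these.
PRICE_PER_MILLION = {
    "input": Decimal("0.50"),
    "output": Decimal("3.00"),
    "audio_input": Decimal("1.00"),
}
MILLION = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int, audio_input_tokens: int = 0) -> Decimal:
    """Return the estimated cost in USD for one request's token counts."""
    counts = {
        "input": input_tokens,
        "output": output_tokens,
        "audio_input": audio_input_tokens,
    }
    for kind, n in counts.items():
        if n < 0:
            raise ValueError(f"{kind} token count must be non-negative, got {n}")
    # Each token class is billed at its own per-million rate.
    return sum(
        (PRICE_PER_MILLION[kind] * n / MILLION for kind, n in counts.items()),
        Decimal(0),
    )


if __name__ == "__main__":
    # A 20k-token prompt with a 2k-token answer: 0.01 + 0.006 = 0.016 USD.
    print(estimate_cost_usd(20_000, 2_000))
```

Using `Decimal` rather than floats avoids rounding noise when summing many small per‑request costs into a budget.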

Built for developers: coding, tools, and multimodal

Gemini 3 Flash brings Pro‑grade coding performance with low latency, an ideal fit for agentic coding, production systems, and responsive interactive apps.

Coding performance and agents

- On SWE‑bench Verified, Gemini 3 Flash scores 78%, outperforming not only the 2.5 series but also Gemini 3 Pro on this benchmark.

- Iterative development flows benefit from near‑real‑time turnarounds, keeping agents responsive and productive.
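Coding agents usually reach a model’s tool‑use abilities through function calling. Below is a minimal sketch, assuming the Gemini API’s REST `tools` / `functionDeclarations` request shape, of how an agent might describe two tools. The tool names `run_tests` and `read_file` are hypothetical, and the agent itself would still have to execute any function call the model returns.

```python
import json

# Hypothetical tools for a coding agent. The shape follows the Gemini API REST
# `tools` -> `functionDeclarations` format with OpenAPI-style parameter
# schemas; verify field names against the current API reference.
RUN_TESTS = {
    "name": "run_tests",
    "description": "Run the project's test suite and report failing tests.",
    "parameters": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Test file or directory to run."}
        },
        "required": ["path"],
    },
}

READ_FILE = {
    "name": "read_file",
    "description": "Return the contents of a source file in the repository.",
    "parameters": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Repository-relative file path."}
        },
        "required": ["path"],
    },
}


def build_request_body(task: str) -> str:
    """Serialize a generateContent body that offers both tools to the model."""
    body = {
        "contents": [{"role": "user", "parts": [{"text": task}]}],
        "tools": [{"functionDeclarations": [RUN_TESTS, READ_FILE]}],
    }
    return json.dumps(body)
```

When the model chooses a tool, its reply contains a `functionCall` part with the function name and arguments; the agent runs it and returns the result in a follow‑up turn. Each of those round trips adds latency, which is why a fast model keeps such loops responsive.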

Multimodal reasoning in practice

- Video analysis, data extraction, and visual Q&A run with strong reasoning and tool‑use capabilities.

- Real‑time UX demos highlight in‑game assistants, on‑the‑fly A/B tests, and multi‑step design‑to‑code pipelines.

- The model can generate multiple design variants from a single instruction and analyze context‑rich images to turn static visuals into interactive experiences.
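For image understanding, a request pairs image bytes with a text prompt. The sketch below builds such a `contents` payload using the Gemini API’s REST `inline_data` part (base64‑encoded bytes plus a MIME type). The helper name `image_question_contents` is hypothetical, and large files would more typically be uploaded through the Files API than sent inline.

```python
import base64
import json


def image_question_contents(image_bytes: bytes, mime_type: str, question: str) -> list:
    """Build a `contents` list that pairs one inline image with a text question."""
    image_part = {
        "inline_data": {
            "mime_type": mime_type,
            # Inline binary data must be base64-encoded text in the JSON body.
            "data": base64.b64encode(image_bytes).decode("ascii"),
        }
    }
    return [{"role": "user", "parts": [image_part, {"text": question}]}]


if __name__ == "__main__":
    placeholder = b"\x89PNG\r\n\x1a\n"  # PNG signature only, not a real image
    contents = image_question_contents(
        placeholder, "image/png", "Which elements of this mockup should become interactive?"
    )
    print(json.dumps({"contents": contents}, indent=2))
```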

Enterprise adoption

Early adopters such as JetBrains, Bridgewater Associates, and Figma report that Gemini 3 Flash’s inference speed, efficiency, and reasoning are on par with larger models, a strong signal of production viability.

For everyone: default in the Gemini app and Search

Gemini 3 Flash is rolling out globally as the default in the Gemini app and AI Mode in Search, bringing next‑generation intelligence to everyday tasks.

What you can do

- Understand short videos and images in seconds — for example, analyze a golf swing and get a practice plan.

- Sketch and get live recognition while you draw — optimized for speed to keep up with your motion.

- Upload audio and get personalized learning: identify knowledge gaps, generate a custom quiz, and receive detailed explanations.

- Build quick apps with your voice — dictate unstructured ideas and watch them become a working prototype in minutes.

Search, upgraded with reasoning

AI Mode with Gemini 3 Flash builds on Gemini 3 Pro’s reasoning, parsing nuanced questions and assembling visually digestible, comprehensive answers. It pulls real‑time local information and links to help you go from research to action at the speed of Search — ideal for last‑minute trip planning or learning complex concepts quickly.

Access and pricing

- Availability: Gemini API (Google AI Studio), Google Antigravity, Gemini CLI, Android Studio, Vertex AI, and Gemini Enterprise.

- Pricing: $0.50 per 1M input tokens; $3 per 1M output tokens; audio input $1 per 1M input tokens.

- Default for consumers: rolling out globally in the Gemini app and AI Mode in Search at no cost.
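As a minimal sketch of raw API access without an SDK, the function below builds (but does not send) a `generateContent` request using only Python’s standard library. The model ID `gemini-3-flash-preview` is an assumption; confirm the current ID in the Gemini API model list. The caller supplies the API key, for example from an environment variable.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-3-flash-preview"  # assumption: verify against the model list


def build_generate_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build, but do not send, a generateContent POST request."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` sends it; the reply is JSON whose generated text typically sits under `candidates[0].content.parts`.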

FAQ

How is Gemini 3 Flash different from Gemini 2.5 Pro and Gemini 3 Pro?

Gemini 3 Flash offers frontier‑level reasoning with significantly lower latency and cost. It outperforms 2.5 Pro on many benchmarks and is 3× faster in Artificial Analysis benchmarking. On MMMU Pro it reaches 81.2%, comparable to Gemini 3 Pro, while targeting speed‑first applications.

What does “modulate how much it thinks” mean in practice?

The model dynamically adjusts its reasoning depth: it can think longer on complex tasks and stays concise for everyday use, which reduces tokens and latency without sacrificing accuracy.
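Beyond the automatic adjustment described here, our understanding of the Gemini 3 API docs is that developers can also set a `thinkingLevel` inside `generationConfig.thinkingConfig` to bound how much the model reasons. The sketch below builds such a request body; the field names and the set of accepted levels are assumptions to verify against the current documentation, and the helper names are hypothetical.

```python
import json

# Assumption: levels documented for Gemini 3 Flash at the time of writing;
# the accepted set may differ by model and may change.
ALLOWED_THINKING_LEVELS = ("minimal", "low", "medium", "high")


def generation_config(thinking_level: str) -> dict:
    """Return a generationConfig fragment that sets the thinking level."""
    if thinking_level not in ALLOWED_THINKING_LEVELS:
        raise ValueError(
            f"unknown thinking level {thinking_level!r}; expected one of {ALLOWED_THINKING_LEVELS}"
        )
    return {"thinkingConfig": {"thinkingLevel": thinking_level}}


def thinking_request_body(prompt: str, thinking_level: str = "low") -> str:
    """Serialize a generateContent body; lower levels favor latency, higher ones depth."""
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": generation_config(thinking_level),
    }
    return json.dumps(body)
```

A reasonable starting point is a low level for chat‑style turns and a high level for multi‑step analysis, though the right setting depends on the workload and is worth measuring.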

Is Gemini 3 Flash suited for multimodal tasks?

Yes. It’s designed for video understanding, image analysis with contextual overlays, and visual Q&A, enabling experiences like in‑game assistants and rapid A/B testing.

Where can I try it and how much does it cost?

Developers can access it via the Gemini API in Google AI Studio and across Google’s developer tools and cloud platforms. Pricing is $0.50/1M input tokens and $3/1M output tokens (audio input $1/1M input tokens).

Is it available to consumers?

Yes. It’s rolling out globally as the default in the Gemini app and AI Mode in Search, bringing fast, high‑quality responses at no cost.

Conclusion

Gemini 3 Flash compresses frontier reasoning into a faster, more affordable package, a rare combination that benefits both developers and everyday users. By pushing the Pareto frontier of performance against cost and speed, it enables new classes of responsive apps, accelerates coding agents, and upgrades daily workflows with powerful multimodal understanding.
